Semantic search using BERT embeddings

BERT is the current state-of-art model for many NLP tasks. BERT output which is essentially context sensitive word vectors, has been used for downstream tasks like classification and NER. This is done by fine tuning the BERT model itself with very little task specific data without task specific architecture.

Semantic search is a use case for BERT where pre-trained word vectors can be used as is, without any fine tuning. Semantic search here is narrowly defined here to mean,

- finding results for an input query where the retrieved results set has candidates with no word matching any word in the input query in the top K results, but are still related/relevant to the input query. These results could precede or be interleaved with results that may in part match user input, by some ranking measure (cosine distance in examples below).

- Entering search terms that are all out of vocabulary words and retrieving results is an extreme case of the above definition. A semantic search system would still return results in contrast to a traditional string/ngram matching system which would return no results.

- This narrow definition is used to distinguish semantic search from traditional search, where each result will contain at least one or more terms of the input query in the result.

- Needless to say, these are complementary search approaches both of which are required based on use case/user intent

- We will examine the use of pre-trained BERT embeddings for semantic search. The search space could be words, phrases, sentences, documents and combinations of them. The examples below used embeddings from BERT large model

Showcase sample

Search space in the illustrated examples.

In the first example, the input is “morden music composers” ( modern misspelled as morden). Nearly half of the top 20 results 4, 5, 9, 10, 11,17,19, 20 (8/20) have no words in common with the input but are semantically related to query (except 10 — which is only weakly related — leisure). The search is done by a dot product of the vector representation of input using BERT (in this case “morden music composers”) and 70,000 BERT vector representation of sentences from a test data set. The results are then sorted on cosine distance.

Query 1: “morden music composers” — 40% of semantically related results (with varying degrees of similarity) have no words in common with input. The “__label__x” terms are human labeled tags ( 14 categories) and are rendered below to show that results are semantically related — results from the same label category categories. Those labels were not fed to the model. It is rendered below for illustrative purpose only.

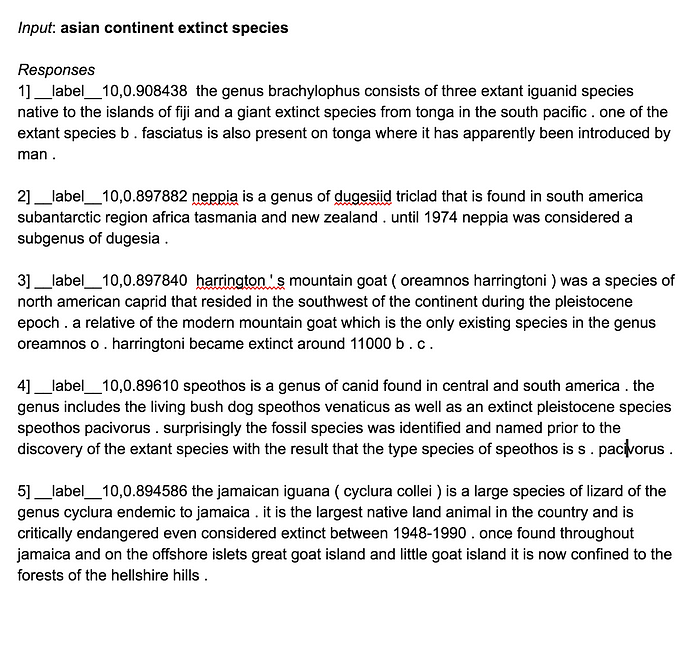

Query 2: “asian continent extinct species” — Results are mostly about species in asia. About half have no words in common with input query

Query 3: “countries where cricket is popular” — the answer is indirect but can be inferred. Result 15 is highlighted to show one case where no words in common with input.

Query 4: “extinct animals”

Query 5: “are dinosaurs related to birds”. The first result indirectly answers this question. This is one useful aspect of this search. We get functionality similar to Q&A without explicit fine tuning. This form of search is complementary to traditional search, and is more an exploration around the search terms of interest. The results are a mix of false and true positives.

Query 6: “name some rivers in china”

Query 7: “rivers in north america”

Query 8: “best selling books”

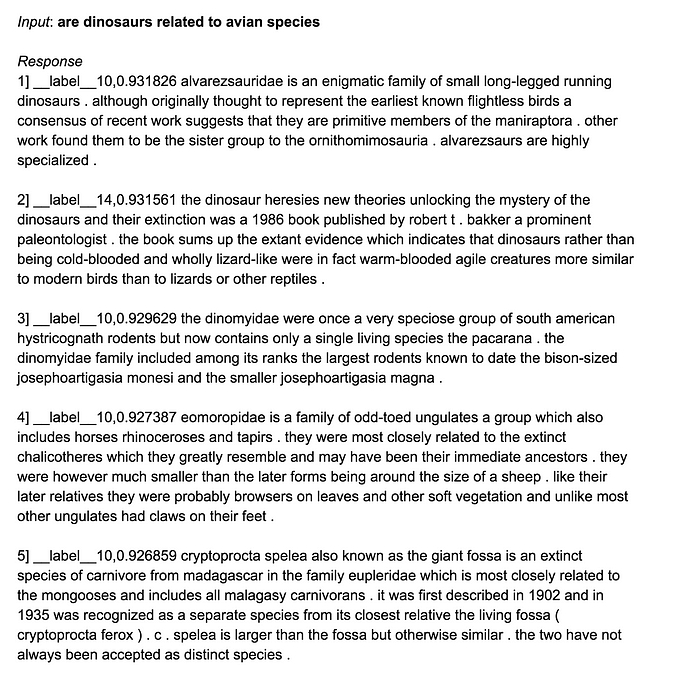

Query 9:”are dinosaurs related to avian species”. Variant of query 5. The top results qualify to be indirect answers to the query.

Query 10: “When did humans originate”. The results for this query seem to capture the sense of “when” more than the related query 11, “where did humans originate”

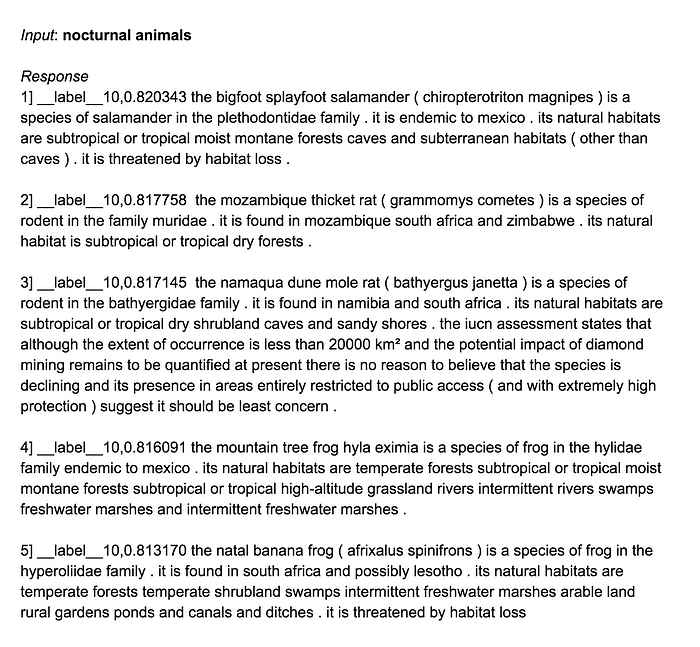

Query 11: “nocturnal animals”. Most of the top results don’t seem to be related to query (though there are relevant ones beyond top 5 — not shown) . This form of search is complementary to traditional search, and is more an exploration around the search terms of interest. The results are a mix of false and true positives.

Query 12: “non-fiction science books” The results contain both function and non-fiction combined and separately. It is a union of “fiction, non-fiction”

Query 13: “birds reptiles amphibians” Again as in Query 13, the result is a union of these three terms.

Query 14. What is the meaning of life

Query 15: What is the purpose of life. Results quite close to Query 15. Both questions — yielding responses

Anecdotally, examples such as the above seem to indicate

- BERT pre-trained embeddings can be a useful candidate for semantic search with some nice properties such as

- Free form input. However results are better when query is succinct (less false positives)

- Free form input without concern for out of vocabulary cases including due to misspellings. However results seem better without misspellings

- Rare Q&A style behavior simulation (questions yielding responses) although,

- as seen in examples above keeping the query small (few words) and succinct tends to yield more relevant responses (reduces the instances of very loosely related responses including false positives)

- results are a union of the concepts of the input query. It is not necessarily what exactly matches the input.

How are these vectors generated?

- 70,000 sentences of fasttext categorization test data was used and vectors for the words (sub words in cases of words like incarceration) in each sentence was used to generate a sentence vector by summing word vectors and dividing by number of words in a sentence. The summing excludes “CLS” and “SEP” tokens. The summing is done over all tokens that constitute a word including the word pieces a word may be broken down into. The data used for this test is from the fasttext data set to do supervised training for classifying a sentence into 14 categories. With BERT we directly use the sentences to generate vectors, and then use the labels as a measure of BERT returning semantically related results. The labels are not used in sentence vector generation. For instance, a quick scan of the above results would shows the top results are largely dominated by one label type. Even if there is a mixture, those results would be semantically similar to the input search.

- The first 32 words in a sentence are used to generate vectors for this test. The larger this value, better the results, but longer the time it takes to generate vectors. This count is the maximum number of words in a sequence after tokenization into subwords. So a word like incarceration would become three subwords — “inca, ##rce, ##ration”

- These vectors were generated using bert-as-service with CLS and SEP not being used for sentence generation. We could use extract_features.py from BERT to generate them too -it takes longer to generate it since it is single threaded.

- Using “CLS and SEP” not only adds more noise, the results are quite different — hence they are skipped. For instance a query that has the tone of a question would return responses that are also questions, as opposed to responses that are more like answers.

- Other options to consider for sentence vector generation instead of averaging is max etc.

- All the vectors used in this test used layer -2 (penultimate layer -layer below the topmost one). Other options to consider are combination of layers.

How are sentence vectors created using BERT word/subword vectors different from sentence vectors made from word vectors of a model like word2vec?

- The key difference is word2vec vectors for a word are the same regardless of context. BERT vectors are not. For example,

- The vector for the word “cell” is one vector in word2vec after training it on a corpus. The vector for “cell” depends on the context of the sentence it occurs. While we can get word vectors for a word like “cell” independent of context, adding context independent BERT word vectors would yield a sentence vector that is not the same as adding up the word vectors constituting a sentence.

- For instance, the sentence vector for “the dog chased the puppy” where we add up the vectors for “the”, “dog” “chased”, “the”,”puppy” in this sentence context, is not the same same vector as adding the vectors for the individual words “the”, “dog”,”chased”, “the”, “puppy”, without any context.

- From a semantic search perspective,

- if the search space has single words that are searchable and if our input query is a single word, word2vec on average does better than BERT — it has a diverse set of semantic neighbors not constrained to the entity type of the search input. BERT on the other hand, tends to return results that are either the same entity type as the input or results that are close from an edit distance perspective. So BERT results look less “semantic” than word2vec vectors for individual words.

- If the search space consists of phrases and sentences, and if our input query is a phrase or a sentence, BERT results are semantically closer to the input than sentences made using word2vec results.

- However, regardless of being single words, phrases, or sentences, BERT has the advantage over word2vec of returning results for any input — given its composition of words from subwords.

- While this comparison seems an unfair one given BERT model honors word sequence whereas word2vec doesn’t, this comparison was done to show that word2vec despite its simplicity has advantages in certain use cases

BERT vector properties observed in a toy data set

Observations below are on a constructed toy data vocab of size 357 consisting of single words (cat, dog etc.) phrases (blood cell, prison cell) and sentences (‘the dog chased the puppy’)

- The sense of a vector representing a word changes with the context the word appears. For instance, the word cell in the sentence “he went to prison cell with a cell phone to extract blood cell samples from the inmates”, the neighbors to the different usage of “cell” reflects the sense. For instance, the top neighbors to the “he went to prison cell with….” are words/phrases like “prison”, “incarceration” etc. whereas the top neighbors to “he went to prison cell with a cell phone to extract blood cell samples from the inmates” are terms like “blood cell”, “biological cell” etc.

- The sum of the vectors constituting individual words of a sentence is far off from the vector composed of the words taking into the word order, particularly as the length of sentence increases. For instance, the vector for “the dog chased the puppy” is far off from the vector composed by adding the individual word vectors alone without context.

- Interestingly the vector for a sentence is very close to the vector for the sentence reversed. This is regardless of the words in the sentence being composed of subwords. For instance, sentence vectors for “plants are the primary food producers in food chain” are close to “chain food in producers food primary the are plants”. This in some sense is not surprising, given the symmetry of attention. However, the senses of words are destroyed in the reverse string, perhaps because of the asymmetry of positional encoding. For instance, the different senses of the word “cell” is lost when the sentence “he went to prison cell with a cell phone to extract blood cell samples from the inmates” is reversed “inmates the from samples cell blood extract to phone cell a with cell prison to went he”

Originally published at ajitsmodelsevals.quora.com.